Online Students Use AI…and Want More. What Should You Do?

The pace at which AI is moving into our lives is nothing short of extraordinary. In less than 24 months, AI has shifted from novelty to necessity. Students aren’t merely experimenting with ChatGPT or Google Gemini anymore—they’re integrating AI into research, writing, studying, and even the way they search for programs. As higher ed leaders navigate policy, pedagogy, and student support, the question isn’t if AI belongs in the online learning experience, but how to integrate it responsibly and effectively.

My 2024 RNL study of prospective and enrolled online students asked a number of questions about AI use – in both search and curriculum – and I wondered how “dated” it would feel, given how fast things are moving. The survey was put in the field in February 2024, so how do the data hold up 18 months later? Let’s see:

What we learned in the RNL study

We asked students how regularly they use AI, whether they used AI-driven chatbots in their program search, and which curriculum elements and areas they would most like to see AI deployed in. Here are the highlights.

Usage by frequency: Daily engagement with AI tools like ChatGPT is nearly identical among online undergrads (14%) and graduate students (12%). Weekly usage is even higher: 33% and 34%, respectively.

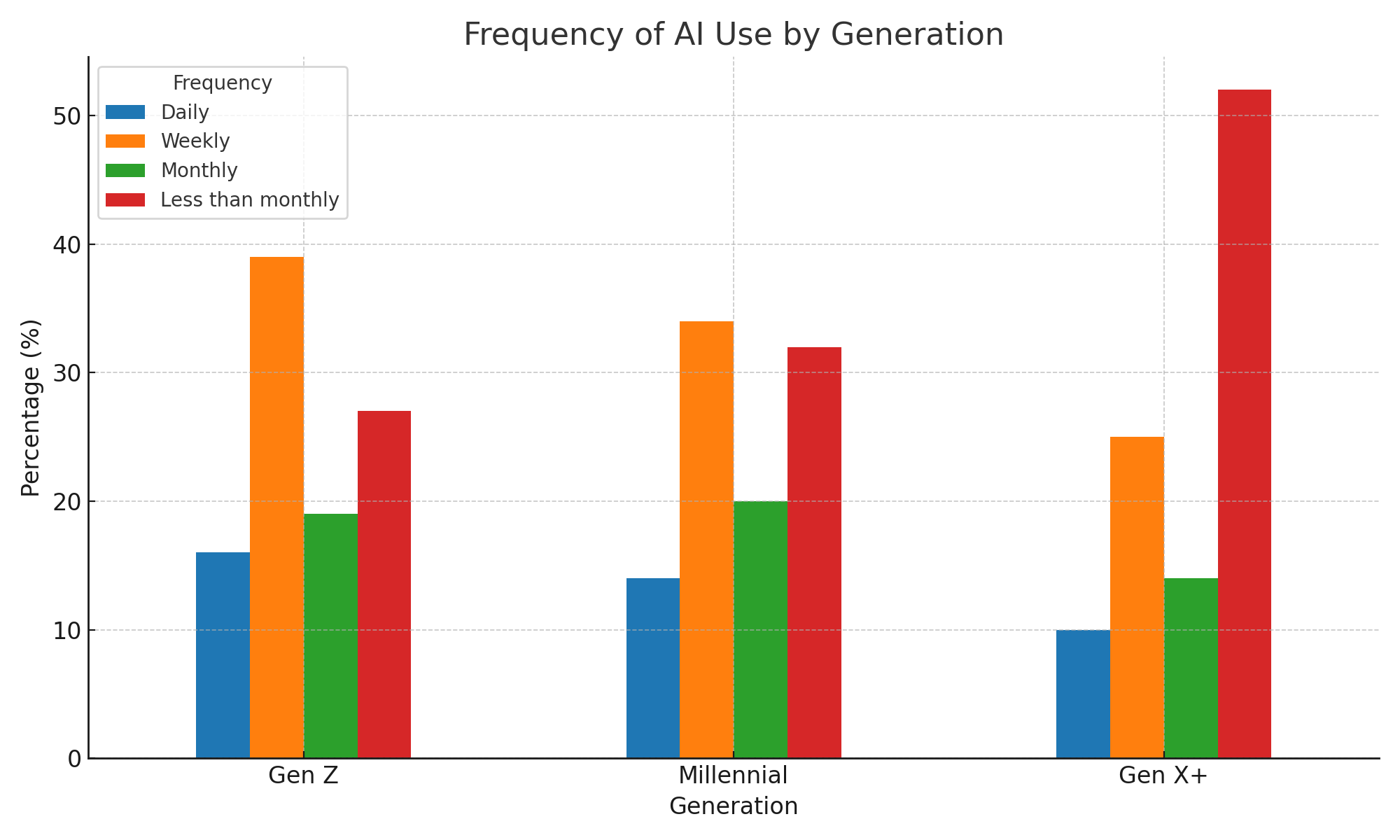

Generational breakdown: Usage skews younger—16% of Gen Z students use AI daily, compared to just 10% of GenX+. Weekly use stands at 39% for Gen Z and only 25% for GenX+.

Data from RNL's 2024 Online Student Recruitment Report - AI use by generation.

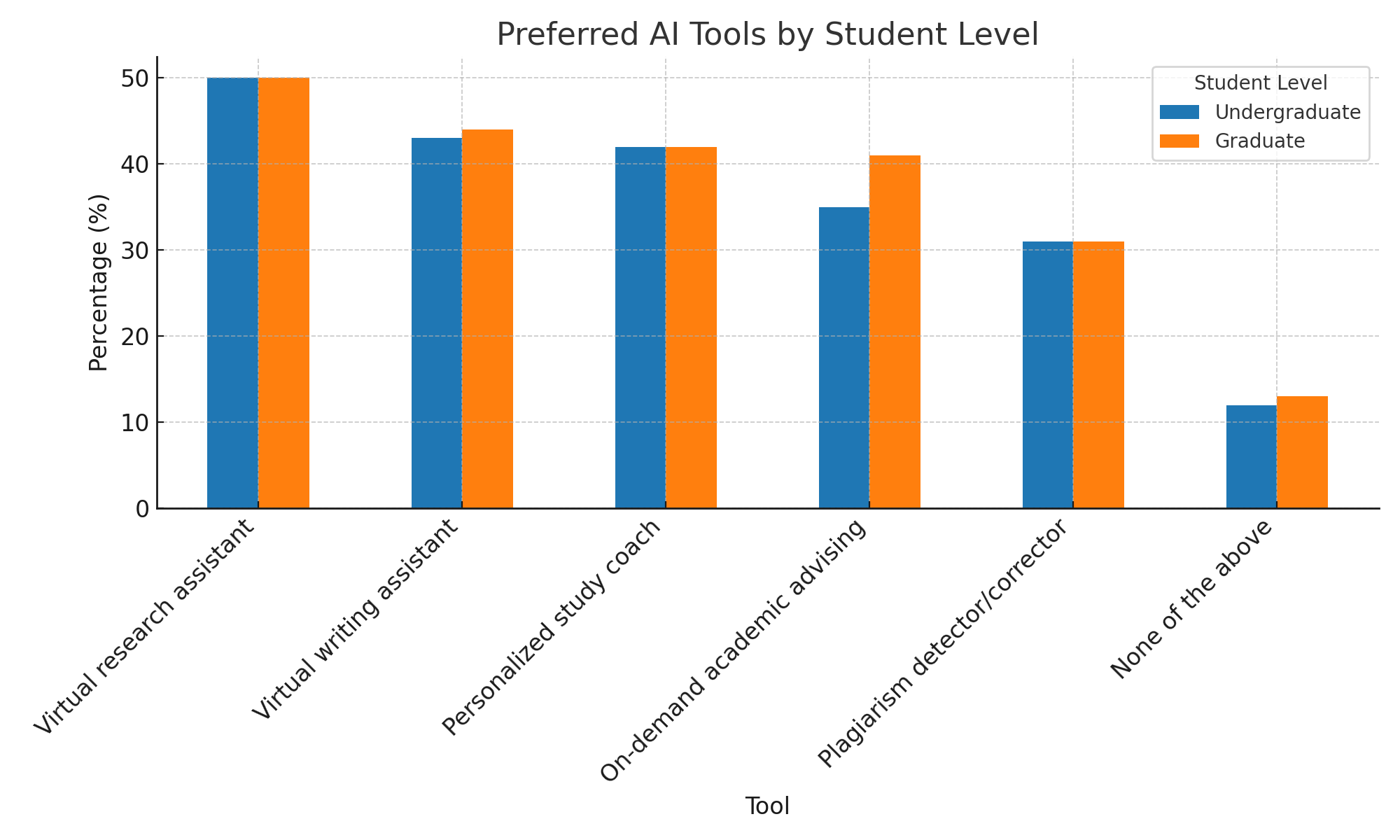

Preferred AI tools for coursework: Half of both undergraduate and graduate online students want a virtual research assistant. Nearly 45% would use virtual writing assistants and personalized study coaches. Graduate students are more interested (41%) in on-demand academic advising bots than their undergraduate counterparts.

Data from RNL's 2024 Online Student Recruitment Report - Preferred uses of AI in coursework.

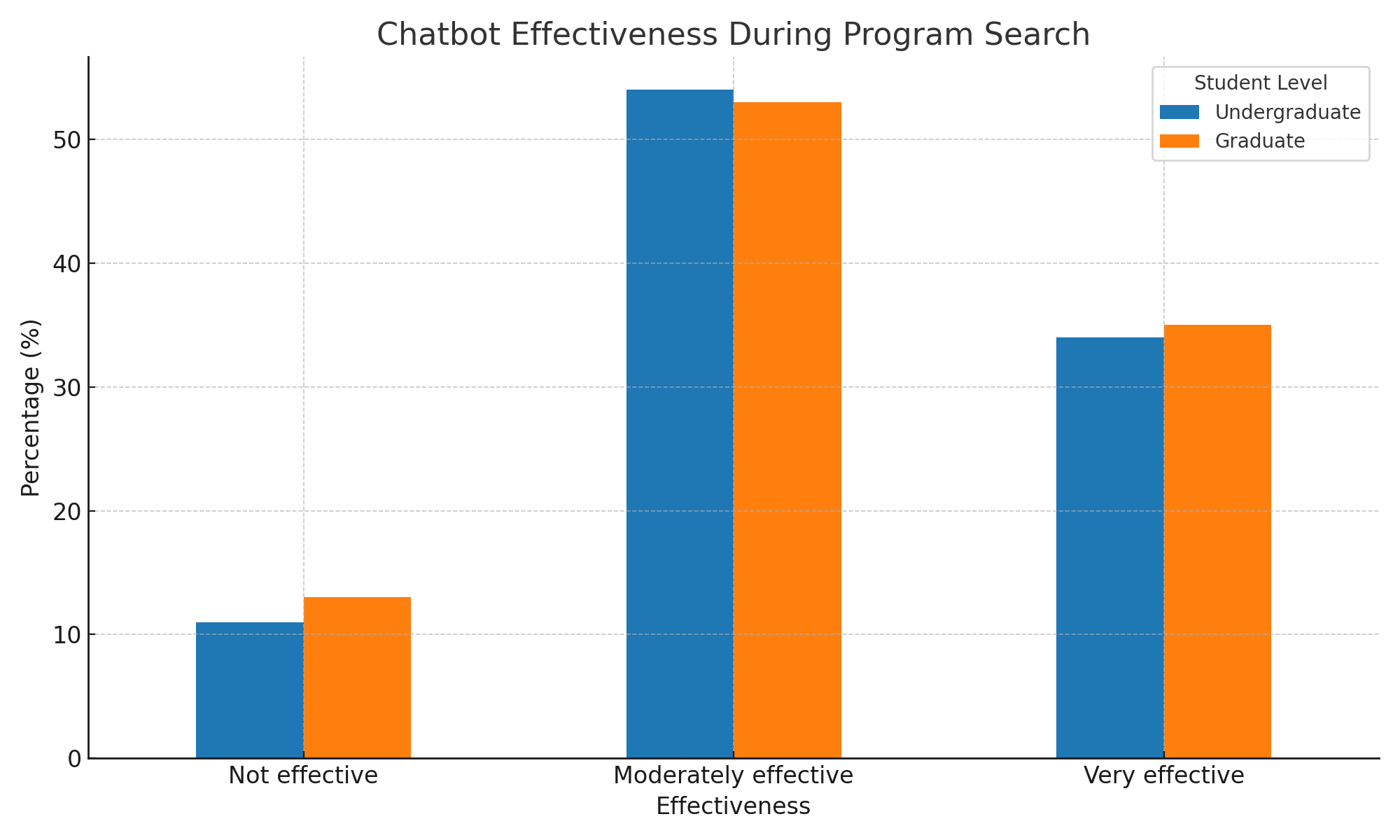

Chatbot usage during program search: A surprising 79% of undergrads and 78% of graduate students used AI-driven chatbots during their search for an online program.

Chatbot effectiveness: Roughly one-third found them very effective; more than half rated them as moderately effective. Gen Z students were most positive—32% rated them very effective, compared to only 23% of GenX+.

Data from RNL's 2024 Online Student Recruitment Report - Rating of AI-chatbot effectiveness.

Taken together, these figures signal that AI isn’t just present—it’s powerful, popular, and potentially transformational.

What has been learned since then

Much of the research conducted since the RNL study has both continued to document the ever-increasing use of AI and delved into how students are using the technology. Several of these studies note considerable concern among students that using AI in their actual coursework will trigger charges of plagiarism, which raises some concern that the reported figures for such use may be lower than what is actually happening. Here are some important developments:

Widespread adoption: A 2025 survey by HEPI and Kortext (UK) shows 92% of students now use AI in their studies—up from 66% just a year earlier.

High-frequency use: In June 2025, Campus Technology reported that, nationwide, 30% of students report daily AI use, and 42% use it weekly.

Study tasks inside coursework. The HEPI/Kortext survey indicates that students most often use AI to explain concepts (58%), summarize articles (now the #2 use), and suggest research ideas. About one quarter use AI-generated text to help draft assignments, and 18% report directly including AI-generated text in submitted work.

Concept mastery and study improvement. Chegg’s survey of undergraduates indicates that 56% say their primary use is to understand concepts/subjects; 50% report improved understanding of complex concepts; 49% say it has improved their ability to complete assignments; 41% say it helps them organize workload. (Global, 15 countries.)

Substitution effects. A Harvard undergraduate survey found that for roughly 25% of users, GenAI has begun to substitute for office hours and completing required readings—a strong signal of how deeply AI is being woven into day-to-day study behavior.

Concerns & guardrails students want. In a Microsoft survey focused on AI use at all levels of education, top worries include accuracy (53% among GenAI users, global), hallucinations/inaccuracies (51%, UK), and being accused of plagiarism (33%, U.S.). Students also say they want clear policies and training—only ~36% report receiving formal support from their institution (UK).

Five actions for online program leaders

Put AI where students already want it: research, writing, and study coaching.

The most in-demand tools are virtual research assistants, writing assistants, and personalized study coaches (≈42–50% interest across levels). Prioritize pilots that integrate these functions into LMS workflows and program hubs. Pair tool rollout with clear guidance on appropriate use—honoring Roger Lee’s “guardrails, not shutdowns” framing.

Treat chatbots as a primary front door—then make them smarter.

With ~80% of prospects using chatbots during their search, ensure your bot can handle program-specific FAQs, deadlines, cost/aid basics, and next steps. For graduate audiences (41% interest in on-demand advising), enable guided pathways that triage to an advisor or scheduler when complexity rises. Track resolution rate and handoff quality, not just engagement.

Segment AI support by generation and intent.

Gen Z uses AI more frequently and values study support (e.g., 45% want a personalized study coach). Offer Gen Z–targeted onboarding to AI study tools, while providing GenX+ with concise, confidence-building “how to start” resources and concierge support to reduce the “less than monthly” usage barrier (52% for GenX+).

Build AI literacy into the recruitment journey—not just the classroom.

Students are using AI before they ever apply. Offer short “AI in this program” explainers, acceptable-use statements, and sample assignments that demonstrate responsible AI use in your program pages, info sessions, and admit packets. This addresses the interest in on-demand advising (esp. among grad students) and sets expectations early.

Measure what matters: experience, not just clicks.

Given that most students rated chatbots as moderately to very effective, track time to answer, completion of task (e.g., booked info session, started application), and student satisfaction after each AI interaction. Use these signals to improve bot flows, content depth, and routing to humans when needed.

My Take: The data says students aren’t waiting for institutions to decide on AI; they’re already using it. For both students and staff, higher education has to set clear guardrails, make the right tools accessible both during the recruitment process and while students are enrolled, and guide responsible use. Determining appropriate uses of AI in business functions (marketing, recruitment, enrollment, etc.) is just as important as in curriculum. Institutions should bring AI into all of these functions, while making clear that this is meant to streamline and enhance work, not eliminate anyone's job. AI is causing unparalleled rates of anxiety in the workplace, whether among students worrying they will not GET a job or employees who think they will be replaced by AI. A strong and steady focus on the ongoing need for experts - HUMAN EXPERTS - will go a long way toward bringing the anxiety level down and ensuring that productivity does not suffer. Institutions that do this will improve operations, streamline support, and strengthen learning, without sacrificing either their academic or human integrity.